init

This commit is contained in:

commit

5b1151035c

4

.gitignore

vendored

Normal file

@ -0,0 +1,4 @@

node_modules/
gdurl.sqlite
config.js
sa/*.json

3

check.js

Normal file

@ -0,0 +1,3 @@

const { ls_folder } = require('./src/gd')

ls_folder({ fid: 'root' }).then(console.log).catch(console.error)

63

compare.md

Normal file

@ -0,0 +1,63 @@

|

|||||||

|

# 对比本工具和其他类似工具在 server side copy 的速度上的差异

|

||||||

|

|

||||||

|

以拷贝[https://drive.google.com/drive/folders/1W9gf3ReGUboJUah-7XDg5jKXKl5XwQQ3](https://drive.google.com/drive/folders/1W9gf3ReGUboJUah-7XDg5jKXKl5XwQQ3)为例([文件统计](https://gdurl.viegg.com/api/gdrive/count?fid=1W9gf3ReGUboJUah-7XDg5jKXKl5XwQQ3))

|

||||||

|

共 242 个文件和 26 个文件夹

|

||||||

|

|

||||||

|

如无特殊说明,以下运行环境都是在本地命令行(挂代理)

|

||||||

|

|

||||||

|

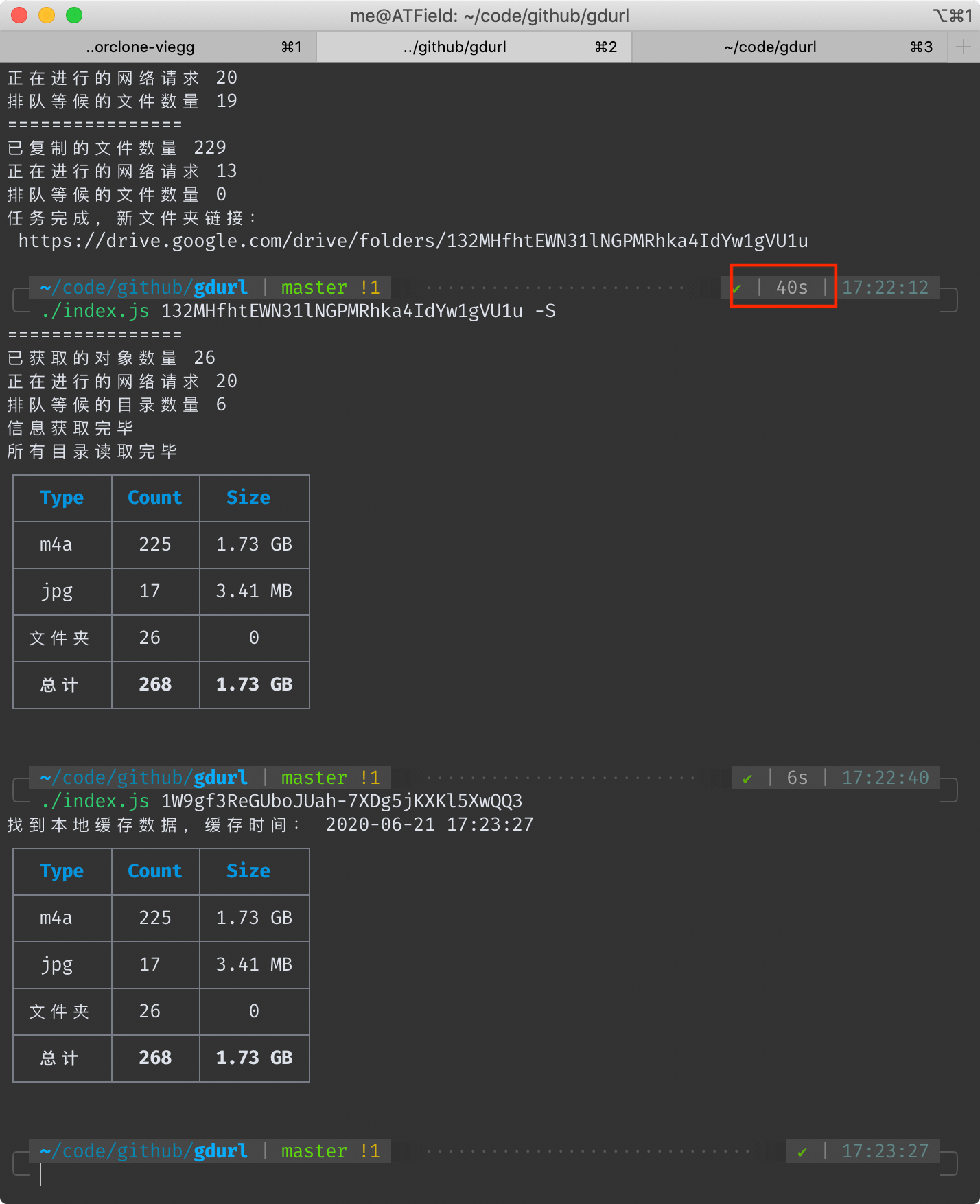

## 本工具耗时 40 秒

|

||||||

|

<!--  -->

|

||||||

|

|

||||||

|

|

||||||

|

另外我在一台洛杉矶的vps上执行相同的命令,耗时23秒。

|

||||||

|

这个速度是在使用本项目默认配置**20个并行请求**得出来的,此值可自行修改(下文有方法),并行请求数越大,总速度越快。

|

||||||

|

|

||||||

|

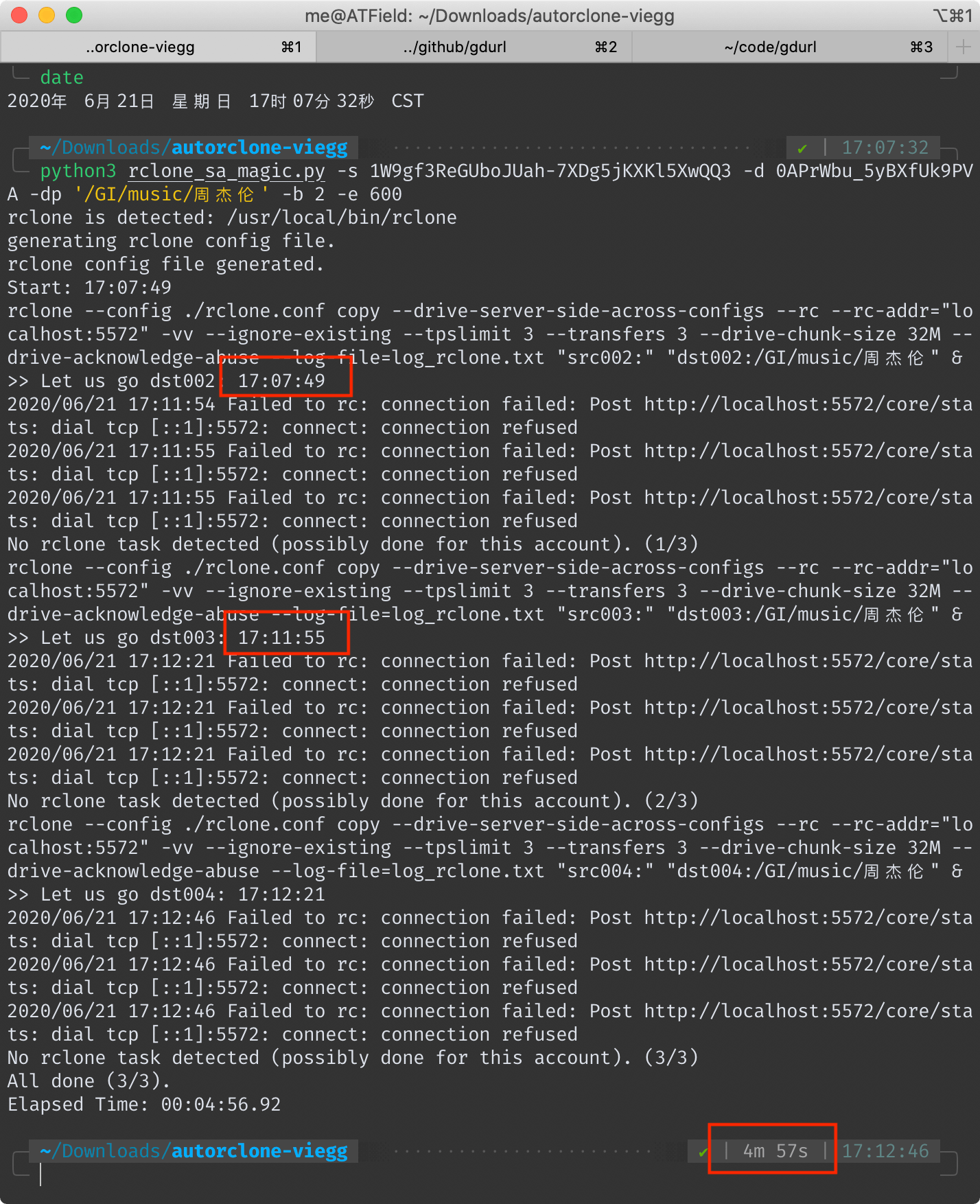

## AutoRclone 耗时 4 分 57 秒(去掉拷贝后验证时间 4 分 6 秒)

|

||||||

|

<!--  -->

|

||||||

|

|

||||||

|

|

||||||

|

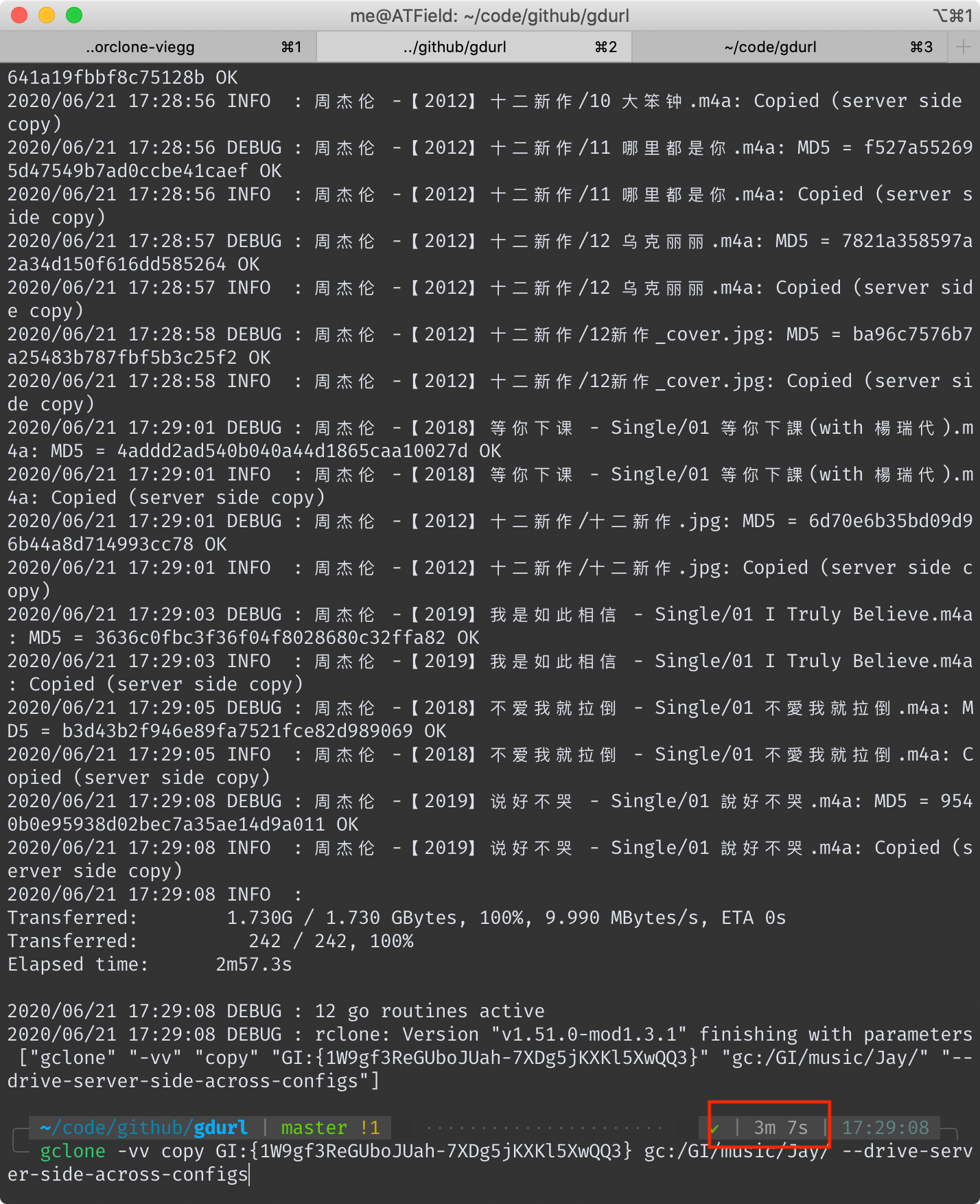

## gclone 耗时 3 分 7 秒

|

||||||

|

<!--  -->

|

||||||

|

|

||||||

|

|

||||||

|

## 为什么速度会有这么大差异

|

||||||

|

首先要明确一下 server side copy(后称ssc) 的原理。

|

||||||

|

|

||||||

|

对于 Google Drive 本身而言,它不会因为你ssc复制了一份文件而真的去在自己的文件系统上复制一遍(否则不管它有多大硬盘都会被填满),它只是在数据库里添上了一笔记录。

|

||||||

|

|

||||||

|

所以,无论ssc一份大文件还是小文件,理论上它的耗时都是一样的。

|

||||||

|

各位在使用这些工具的时候也可以感受到,复制一堆小文件比复制几个大文件要慢得多。

|

||||||

|

|

||||||

|

Google Drive 官方的 API 只提供了复制单个文件的功能,无法直接复制整个文件夹。甚至也无法读取整个文件夹,只能读取某个文件夹的第一层子文件(夹)信息,类似 Linux 命令行里的 `ls` 命令。
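
Because only one level can be listed per call, a whole tree has to be walked folder by folder. The sketch below illustrates that idea under stated assumptions: `fakeDrive`, `listChildren` and `walk` are hypothetical names, and the in-memory object stands in for the real one-level listing request.

```javascript
const FOLDER_TYPE = 'application/vnd.google-apps.folder'

// Hypothetical in-memory stand-in for a one-level listing call.
const fakeDrive = {
  root: [
    { id: 'a', name: 'docs', mimeType: FOLDER_TYPE },
    { id: 'f1', name: 'readme.txt', mimeType: 'text/plain' }
  ],
  a: [
    { id: 'b', name: 'old', mimeType: FOLDER_TYPE },
    { id: 'f2', name: 'notes.txt', mimeType: 'text/plain' }
  ],
  b: []
}

// Returns only the direct children of a folder, like `ls`.
async function listChildren (folderId) {
  return fakeDrive[folderId] || []
}

// Walk the tree by calling the one-level lister once per folder.
async function walk (folderId, list = listChildren) {
  const children = await list(folderId)
  const folders = children.filter(v => v.mimeType === FOLDER_TYPE)
  // Sibling folders are independent, so they can be listed in parallel.
  const nested = await Promise.all(folders.map(v => walk(v.id, list)))
  return children.map(v => ({ ...v, parent: folderId })).concat(...nested)
}
```

Calling `walk('root')` resolves to a flat array of every object in the tree, each tagged with its parent folder ID.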

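The ssc feature of all three tools is, at bottom, a call to the [official file copy API](https://developers.google.com/drive/api/v3/reference/files/copy).

This tool works roughly as follows:

- First, it recursively reads the information of every file and folder in the source directory and saves it locally.
- Next, it filters out the folder objects and, following their parent-child relationships, creates new folders with the same names to rebuild the original structure. (Preserving the original folder structure without giving up speed took real effort.)
- Finally, using the old-to-new folder ID mapping recorded in the previous step, it calls the official API to copy the files.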
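
The second and third steps can be sketched as follows. This is a minimal illustration, not the project's actual implementation: `createFolder`, `recreateFolders` and `targetParentOf` are hypothetical names, and `createFolder` only simulates the real "create folder" request.

```javascript
let counter = 0

// Hypothetical stand-in for the request that creates one folder.
async function createFolder (name, parentId) {
  return { id: 'new' + (++counter), name, parent: parentId }
}

// folders: [{ id, name, parent }] taken from the locally saved listing.
async function recreateFolders (folders, sourceRoot, targetRoot, create = createFolder) {
  const mapping = { [sourceRoot]: targetRoot } // old folder ID -> new folder ID
  let level = folders.filter(v => v.parent === sourceRoot)
  while (level.length) {
    // Folders on the same level do not depend on each other,
    // so they can be created in parallel.
    const created = await Promise.all(level.map(v => create(v.name, mapping[v.parent])))
    level.forEach((v, i) => { mapping[v.id] = created[i].id })
    const ids = new Set(level.map(v => v.id))
    level = folders.filter(v => ids.has(v.parent))
  }
  return mapping
}

// Each file is then copied into the new folder that mirrors its old parent.
function targetParentOf (file, mapping) {
  return mapping[file.parent]
}
```

Working level by level guarantees that a parent's new ID is known before any of its children are created, while still allowing parallel requests within a level.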

|

||||||

|

|

||||||

|

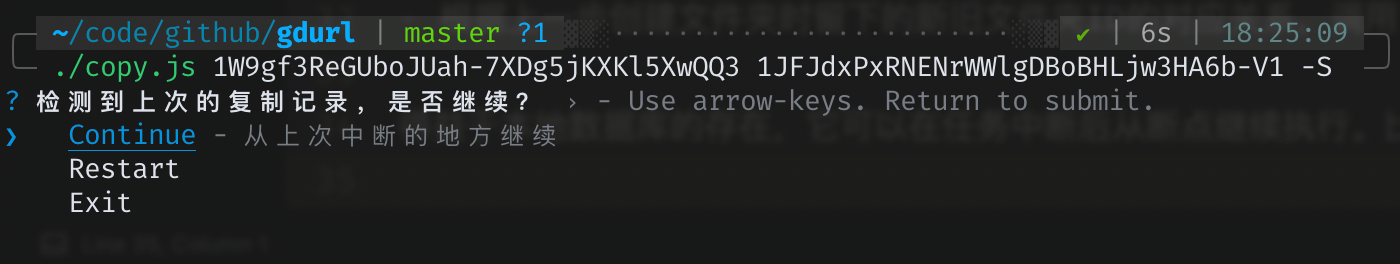

得益于本地数据库的存在,它可以在任务中断后从断点继续执行。比如用户按下`ctrl+c`后,可以再执行一遍相同的拷贝命令,本工具会给出三个选项:

|

||||||

|

<!--  -->

|

||||||

|

|

||||||

|

|

||||||

|

另外两个工具也支持断点续传,它们是怎样做到的呢?AutoRclone是用python对rclone命令的一层封装,gclone是基于rclone的魔改。

|

||||||

|

对了——值得一提的是——本工具是直接调用的官方API,不依赖于rclone。

|

||||||

|

|

||||||

|

我没有仔细阅读过rclone的源码,但是从它的执行日志中可以大概猜出其工作原理。

|

||||||

|

先补充个背景知识:对于存在于Google drive的所有文件(夹)对象,它们的一生都伴随着一个独一无二的ID,就算一个文件是另一个的拷贝,它们的ID也不一样。

|

||||||

|

|

||||||

|

所以rclone是怎么知道哪些文件拷贝过,哪些没有呢?如果它没有像我一样将记录保存在本地数据库的话,那么它只能在同一路径下搜索是否存在同名文件,如果存在,再比对它们的 大小/修改时间/md5值 等判断是否拷贝过。

|

||||||

|

|

||||||

|

也就是说,在最坏的情况下(假设它没做缓存),它每拷贝一个文件之前,都要先调用官方API来搜索判断此文件是否已存在!
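
By contrast, a locally stored list of copied IDs lets a resumed run skip finished files without any extra API call. The following is only a sketch of that idea: `copyAll` and `copyOne` are hypothetical names, and `copyOne` stands in for a single server-side copy request.

```javascript
// files: [{ id }], copied: IDs recorded by a previous, interrupted run.
async function copyAll (files, copied, copyOne) {
  const done = new Set(copied)
  let requests = 0
  for (const file of files) {
    if (done.has(file.id)) continue // answered locally, no API call needed
    await copyOne(file)
    requests++
    done.add(file.id) // in a real tool this would be persisted as well
  }
  return { requests, copied: [...done] }
}
```

With three files of which two were already recorded, only one copy request is made on the second run.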

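In addition, although AutoRclone and gclone both switch service accounts automatically, each copy task calls the API through a single SA at a time, so they cannot push the request rate too high without risking rate limits.

This tool also switches service accounts automatically. The difference is that it picks a random SA for every request. My [file statistics](https://gdurl.viegg.com/api/gdrive/count?fid=1W9gf3ReGUboJUah-7XDg5jKXKl5XwQQ3) endpoint uses the tokens of 20 SAs with the number of parallel requests set to 20, so on average each SA handles only one concurrent request.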
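
The per-request random choice can be pictured with the small sketch below. The names `getRandomElement` and `averageLoad` are illustrative assumptions; the project's own helper is not shown in this part of the source.

```javascript
// Pick a random element; `random` is injectable so the choice is testable.
function getRandomElement (arr, random = Math.random) {
  return arr[Math.floor(random() * arr.length)]
}

// Average number of in-flight requests per account.
function averageLoad (parallelLimit, accountCount) {
  return parallelLimit / accountCount
}

const accounts = Array.from({ length: 20 }, (_, i) => 'sa-' + i)
console.log(getRandomElement(accounts)) // a different account on most calls
console.log(averageLoad(20, accounts.length)) // 1
```

Spreading requests this way keeps the load on any single account low even when the total number of parallel requests is raised.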

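So the bottleneck is not the SA rate limit but the VPS or proxy the tool runs on. Adjust PARALLEL_LIMIT (in `config.js`) to suit your own setup.

Of course, if an SA exceeds its 750G daily traffic quota, the tool switches to another SA and filters out the exhausted one. When every SA is exhausted it falls back to the personal access token, and once that quota is used up as well the process exits.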
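
The fallback order described above might look like the sketch below. `pickCredential` is a hypothetical helper, and for the sake of a deterministic example it takes the first usable account rather than a random one.

```javascript
// accounts: SA names; exhausted: Set of SA names whose quota is used up.
// Returns the credential to use next, or null when nothing is left.
function pickCredential (accounts, exhausted, personalExhausted) {
  const usable = accounts.filter(name => !exhausted.has(name))
  if (usable.length) return { kind: 'service_account', name: usable[0] }
  if (!personalExhausted) return { kind: 'personal' }
  return null // every quota is used up, the process should exit
}
```

A caller would add an account to `exhausted` when a copy request reports that its quota is used up, then ask for the next credential.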

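
*A limitation of SAs: besides the daily traffic limit, each SA also has a **15G storage quota on personal drives**, so each SA can copy at most 15G of files to a personal drive. Copying to a team drive has no such limit.*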

24

config.js

Normal file

@ -0,0 +1,24 @@

// Time in milliseconds before a single request times out (base value; after consecutive timeouts the next timeout is double the previous one)
const TIMEOUT_BASE = 7000
// Upper bound for the timeout. For one request: 7s on the first try, then 14s, 28s, 56s; the fifth is 60s rather than 112s, and so on
const TIMEOUT_MAX = 60000

const LOG_DELAY = 5000 // interval between log outputs, in milliseconds
const PAGE_SIZE = 1000 // number of files read from a directory per request; larger values are more likely to time out; must not exceed 1000

const RETRY_LIMIT = 7 // maximum number of retries for a failed request
const PARALLEL_LIMIT = 20 // number of parallel network requests; adjust to your network conditions

const DEFAULT_TARGET = '' // required: ID of the default copy destination, used when no target is given; a team drive ID is recommended

const AUTH = { // if you have service account JSON key files, you can copy them into the sa directory instead of using client_id/secret/refresh_token
  client_id: 'your_client_id',
  client_secret: 'your_client_secret',
  refresh_token: 'your_refresh_token',
  expires: 0, // may be left as is
  access_token: '', // may be left empty
  tg_token: 'bot_token', // token of your telegram bot, see https://core.telegram.org/bots#6-botfather
  tg_whitelist: ['your_tg_username'] // your tg username (t.me/username); the bot only executes commands sent by users in this list
}

module.exports = { AUTH, PARALLEL_LIMIT, RETRY_LIMIT, TIMEOUT_BASE, TIMEOUT_MAX, LOG_DELAY, PAGE_SIZE, DEFAULT_TARGET }

49

copy

Executable file

@ -0,0 +1,49 @@

#!/usr/bin/env node

const bytes = require('bytes')

const { argv } = require('yargs')
  .usage('Usage: ./$0 <source id> <target id> [options]\ntarget id is optional; DEFAULT_TARGET from config.js is used when it is omitted')
  .alias('u', 'update')
  .describe('u', 'ignore the local cache and fetch the source folder information online')
  .alias('f', 'file')
  .describe('f', 'copy a single file')
  .alias('n', 'name')
  .describe('n', 'rename the target folder; the original folder name is kept when omitted')
  .alias('N', 'not_teamdrive')
  .describe('N', 'for links that are not team drives; speeds up API queries and lowers latency')
  .alias('s', 'size')
  .describe('s', 'all files are copied by default; when set, files smaller than this size are skipped; the value must end with b, e.g. 10mb')
  .alias('S', 'service_account')
  .describe('S', 'use service accounts; requires JSON key files in the ./sa directory, and the SA accounts must have the necessary permissions')
  .help('h')
  .alias('h', 'help')

const { copy, copy_file, validate_fid } = require('./src/gd')
const { DEFAULT_TARGET } = require('./config')

let [source, target] = argv._

if (validate_fid(source)) {
  const { name, update, file, not_teamdrive, size, service_account } = argv
  if (file) {
    target = target || DEFAULT_TARGET
    if (!validate_fid(target)) throw new Error('invalid target id format')
    return copy_file(source, target).then(r => {
      const link = 'https://drive.google.com/drive/folders/' + target
      console.log('Task finished, file location:\n', link)
    }).catch(console.error)
  }
  let min_size
  if (size) {
    console.log(`Files smaller than ${size} will not be copied`)
    min_size = bytes.parse(size)
  }
  copy({ source, target, name, min_size, update, not_teamdrive, service_account }).then(folder => {
    if (!folder) return
    const link = 'https://drive.google.com/drive/folders/' + folder.id
    console.log('Task finished, new folder link:\n', link)
  }).catch(console.error) // report failures instead of leaving the rejection unhandled
} else {
  console.warn('Folder ID is missing or malformed')
}

31

count

Executable file

@ -0,0 +1,31 @@

#!/usr/bin/env node

const { argv } = require('yargs')
  .usage('Usage: ./$0 <folder ID> [options]')
  .example('./$0 1ULY8ISgWSOVc0UrzejykVgXfVL_I4r75', 'get statistics for all files contained in https://drive.google.com/drive/folders/1ULY8ISgWSOVc0UrzejykVgXfVL_I4r75')
  .example('./$0 root -s size -t html -o out.html', 'get statistics for the personal drive root, output as an HTML table sorted by total size in descending order, saved to out.html in this directory (created if missing, overwritten if present)')
  .example('./$0 root -s name -t json -o out.json', 'get statistics for the personal drive root, output as JSON sorted by file extension, saved to out.json in this directory')
  .example('./$0 root -t all -o all.json', 'get statistics for the personal drive root, output the information of all files (folders included) as JSON, saved to all.json in this directory')
  .alias('u', 'update')
  .describe('u', 'force fetching the information online (ignoring any local cache)')
  .alias('N', 'not_teamdrive')
  .describe('N', 'for links that are not team drives; speeds up API queries and lowers latency. When counting a personal drive that the service accounts in ./sa cannot access, be sure to pass this flag so that your personal auth information is used')
  .alias('S', 'service_account')
  .describe('S', 'use service accounts for counting; requires SA json files in the sa directory')
  .alias('s', 'sort')
  .describe('s', 'sort order of the result, either name or size; sorted by file count in descending order when omitted')
  .alias('t', 'type')
  .describe('t', 'output type of the result, one of html/json/all; all outputs the json data of every file and is best combined with -o. A command-line table is printed when omitted')
  .alias('o', 'output')
  .describe('o', 'output file for the result, best combined with -t')
  .help('h')
  .alias('h', 'help')

const { count, validate_fid } = require('./src/gd')
const [fid] = argv._
if (validate_fid(fid)) {
  const { update, sort, type, output, not_teamdrive, service_account } = argv
  count({ fid, update, sort, type, output, not_teamdrive, service_account }).catch(console.error)
} else {
  console.warn('Folder ID is missing or malformed')
}

29

create-table.sql

Normal file

@ -0,0 +1,29 @@

CREATE TABLE "gd" (
  "id" INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT UNIQUE,
  "fid" TEXT NOT NULL UNIQUE,
  "info" TEXT,
  "summary" TEXT,
  "subf" TEXT,
  "ctime" INTEGER,
  "mtime" INTEGER
);

CREATE UNIQUE INDEX "gd_fid" ON "gd" (
  "fid"
);

CREATE TABLE "task" (
  "id" INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT UNIQUE,
  "source" TEXT NOT NULL,
  "target" TEXT NOT NULL,
  "status" TEXT,
  "copied" TEXT DEFAULT '',
  "mapping" TEXT DEFAULT '',
  "ctime" INTEGER,
  "ftime" INTEGER
);

CREATE UNIQUE INDEX "task_source_target" ON "task" (
  "source",
  "target"
);

5

db.js

Normal file

@ -0,0 +1,5 @@

const path = require('path')
const db_location = path.join(__dirname, 'gdurl.sqlite')
const db = require('better-sqlite3')(db_location)

module.exports = { db }

24

dedupe

Executable file

@ -0,0 +1,24 @@

#!/usr/bin/env node

const { argv } = require('yargs')
  .usage('Usage: ./$0 <folder id> [options]')
  .alias('u', 'update')
  .describe('u', 'ignore the local cache and fetch the folder information online')
  .alias('S', 'service_account')
  .describe('S', 'use service accounts; requires SA json key files in the ./sa directory')
  .help('h')
  .alias('h', 'help')

const { dedupe, validate_fid } = require('./src/gd')

const [fid] = argv._
if (validate_fid(fid)) {
  const { update, service_account } = argv
  dedupe({ fid, update, service_account }).then(info => {
    if (!info) return
    const { file_count, folder_count } = info
    console.log('Task finished, files deleted:', file_count, 'folders deleted:', folder_count)
  }).catch(console.error) // report failures instead of leaving the rejection unhandled
} else {
  console.warn('Folder ID is missing or malformed')
}

BIN

gdurl.sqlite

Normal file

Binary file not shown.

1340

package-lock.json

generated

Normal file

File diff suppressed because it is too large

31

package.json

Normal file

@ -0,0 +1,31 @@

{
  "name": "gd-utils",
  "version": "1.0.0",
  "description": "google drive utils",
  "main": "src/gd.js",
  "scripts": {
    "start": "https_proxy='http://127.0.0.1:1086' nodemon server.js",
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "viegg",
  "license": "ISC",
  "dependencies": {
    "@koa/router": "^9.0.1",
    "@viegg/axios": "^1.0.0",
    "better-sqlite3": "^7.1.0",
    "bytes": "^3.1.0",
    "cli-table3": "^0.6.0",
    "colors": "^1.4.0",
    "dayjs": "^1.8.28",
    "gtoken": "^5.0.1",
    "html-escaper": "^3.0.0",
    "https-proxy-agent": "^5.0.0",
    "koa": "^2.13.0",
    "koa-bodyparser": "^4.3.0",
    "p-limit": "^3.0.1",
    "prompts": "^2.3.2",
    "signal-exit": "^3.0.3",
    "yargs": "^15.3.1"
  }
}

115

readme.md

Normal file

@ -0,0 +1,115 @@

# Google Drive Toolbox

> More than just the fastest google drive copy tool. [Comparison with other tools](./compare.md)

## Features
This tool currently supports the following:
- Collecting file statistics for any directory (that you have permission to access; the same applies below) and exporting them in several formats (html, table, json).
  Interrupted runs can be resumed, and the information of counted directories is stored in a local database file (gdurl.sqlite).
  Run `./count -h` on the command line in the project directory for usage help.

- Copying all files of any directory to a directory you specify, also with resume support.
  Filtering by file size is supported; run `./copy -h` for usage help.

- Deduplicating any directory: files with the same md5 within one directory are deleted (keeping one), and empty directories are removed.
  Run `./dedupe -h` for usage help.

- After finishing the settings in config.js, the project can be deployed on a server (one that can reach Google services) to provide an http api for file statistics.

- telegram bot support: once configured, all of the features above can be used through the bot.

## demo
[https://drive.google.com/drive/folders/124pjM5LggSuwI1n40bcD5tQ13wS0M6wg](https://drive.google.com/drive/folders/124pjM5LggSuwI1n40bcD5tQ13wS0M6wg)

## Environment setup
This tool requires nodejs. For a desktop install see [https://nodejs.org/zh-cn/download/](https://nodejs.org/zh-cn/download/); for a server install see [https://github.com/nodesource/distributions/blob/master/README.md#debinstall](https://github.com/nodesource/distributions/blob/master/README.md#debinstall)

If your network cannot reach Google services, first configure a proxy on the command line (skip this section otherwise). The variables are exported so that the node process started later can read them:
```
export http_proxy="YOUR_PROXY_URL" && export https_proxy=$http_proxy && export HTTP_PROXY=$http_proxy && export HTTPS_PROXY=$http_proxy
```
Replace `YOUR_PROXY_URL` with your own proxy address.

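
## Installing dependencies
- Run `git clone https://github.com/iwestlin/gdurl && cd gdurl` to clone the project and switch into its folder.
- Run `npm i` to install the dependencies. Some of them may need a proxy to download, hence the configuration in the previous section.

If the installation fails with an error, switch nodejs to v12 and try again. If the error output contains a message like `Error: not found: make`, your command-line environment lacks the make command; see [here](https://askubuntu.com/questions/192645/make-command-not-found) or search Google for `Make Command Not Found`.

When the dependencies are installed, a `node_modules` directory appears in the project folder. Do not delete it. Then move on to the next configuration step.

## Service Account configuration
Using service accounts (SA below) is strongly recommended. For how to obtain them see [https://gsuitems.com/index.php/archives/13/](https://gsuitems.com/index.php/archives/13/#%E6%AD%A5%E9%AA%A42%E7%94%9F%E6%88%90serviceaccounts)
Once you have the SA json files, copy them into the `sa` directory.

If you do not need to operate on files in a personal drive, you can skip the [Personal account configuration] section after setting up the SAs. Remember to pass the `-S` flag when running commands so that the program uses SA authorization.

## Personal account configuration
- Run `rclone config file` on the command line to find the path of the rclone configuration file.
- Open this configuration file, `rclone.conf`, find the three variables `client_id`, `client_secret` and `refresh_token`, and fill them into `config.js` in this project. Each value must be wrapped in a matching pair of ASCII quotes and followed by an ASCII comma, i.e. it must follow JavaScript [object syntax](https://developer.mozilla.org/zh-CN/docs/Web/JavaScript/Reference/Operators/Object_initializer).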
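
As an illustration of the quoting rules above, the three values could be written as in the sketch below. The strings are placeholders rather than real credentials, and the sanity check at the end is an optional addition that is not part of the project.

```javascript
// Placeholder values only; replace them with the ones from your rclone.conf.
const AUTH = {
  client_id: 'your_client_id', // quoted string, followed by a comma
  client_secret: 'your_client_secret',
  refresh_token: 'your_refresh_token'
}

// Optional sanity check: every value should be a non-empty string.
const missing = Object.keys(AUTH).filter(k => typeof AUTH[k] !== 'string' || !AUTH[k])
console.log(missing.length ? 'missing: ' + missing.join(', ') : 'AUTH looks complete')
```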


If you have never configured rclone, search for `rclone google drive tutorial` and complete the setup first.

If your `rclone.conf` has no `client_id` and `client_secret`, rclone was configured with its own default client_id. Even rclone [advises against this](https://github.com/rclone/rclone/blob/8d55367a6a2f47a1be7e360a872bd7e56f4353df/docs/content/drive.md#making-your-own-client_id), because everyone shares its API quota and may hit the limit at peak times.

To get your own client_id, see these two articles: [Cloudbox/wiki/Google-Drive-API-Client-ID-and-Client-Secret](https://github.com/Cloudbox/Cloudbox/wiki/Google-Drive-API-Client-ID-and-Client-Secret) and [https://p3terx.com/archives/goindex-google-drive-directory-index.html#toc_2](https://p3terx.com/archives/goindex-google-drive-directory-index.html#toc_2)

After obtaining the client_id and client_secret, run `rclone config` again and create a new remote. **Be sure to enter your new client_id and client_secret during configuration.** The new `refresh_token` then appears in `rclone.conf`. **Note that the previous refresh_token cannot be used**, because it belongs to rclone's built-in client_id.

When the parameters are set, run `node check.js` on the command line. If the command returns the data of your Google Drive root directory, the configuration works and the tool is ready to use.


## Bot configuration
The telegram bot feature needs some further configuration.

First follow the instructions at [https://core.telegram.org/bots#6-botfather](https://core.telegram.org/bots#6-botfather) to get the bot token, then fill it into the `tg_token` variable in config.js.

Next, deploy the code to a server.
Pack the configured project folder, upload it to the server, unpack it, enter the project directory and run `npm i pm2 -g` (nodejs must be installed first).

With pm2 installed, run `pm2 start server.js`. The running code listens on port `23333` on the server. You can then put a web service in front of it with nginx or another tool. Sample nginx configuration:
```
server {
  listen 80;
  server_name your.server.name;

  location / {
    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_pass http://127.0.0.1:23333/;
  }
}
```
With nginx configured, you can add cloudflare in front of it; search for a tutorial on that.

Finally, run the following on the command line (replace [YOUR_WEBSITE] and [YOUR_BOT_TOKEN] with your own site address and bot token):
```
curl -F "url=[YOUR_WEBSITE]/api/gdurl/tgbot" 'https://api.telegram.org/bot[YOUR_BOT_TOKEN]/setWebhook'
```
This connects your server to your telegram bot. Try sending `/help` to the bot; if it replies with usage instructions, the configuration succeeded.


## Additional notes
The `config.js` file contains a few more parameters:
```
// Time in milliseconds before a single request times out (base value; after consecutive timeouts the next timeout is double the previous one)
const TIMEOUT_BASE = 7000

// Upper bound for the timeout. For one request: 7s on the first try, then 14s, 28s, 56s; the fifth is 60s rather than 112s, and so on
const TIMEOUT_MAX = 60000

const LOG_DELAY = 5000 // interval between log outputs, in milliseconds
const PAGE_SIZE = 1000 // number of files read from a directory per request; larger values are more likely to time out; must not exceed 1000

const RETRY_LIMIT = 7 // maximum number of retries for a failed request
const PARALLEL_LIMIT = 20 // number of parallel network requests; adjust to your network conditions

const DEFAULT_TARGET = '' // required: ID of the default copy destination, used when no target is given; a team drive ID is recommended; keep it wrapped in ASCII quotes
```
Adjust them to your own situation.
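
To make the timeout rule concrete, the sketch below computes the timeout used for each attempt. `timeoutSchedule` is a hypothetical helper written for this illustration; the doubling with a cap mirrors the `Math.min(timeout * 2, TIMEOUT_MAX)` rule described in the comments above.

```javascript
const TIMEOUT_BASE = 7000
const TIMEOUT_MAX = 60000

// Timeout for each attempt: doubles after every failure, capped at `max`.
function timeoutSchedule (attempts, base = TIMEOUT_BASE, max = TIMEOUT_MAX) {
  const schedule = []
  let timeout = base
  for (let i = 0; i < attempts; i++) {
    schedule.push(timeout)
    timeout = Math.min(timeout * 2, max)
  }
  return schedule
}

console.log(timeoutSchedule(5).join(', ')) // 7000, 14000, 28000, 56000, 60000
```

Lowering `TIMEOUT_BASE` makes the tool give up on slow requests sooner, at the cost of more retries on a slow connection.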

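
## Notes
The program works by calling the [official google drive API](https://developers.google.com/drive/api/v3/reference/files/list) to recursively fetch the information of all files and subfolders under the target folder. Roughly speaking, counting a directory takes at least as many requests as the number of folders it contains.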
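
A rough lower bound on the number of listing requests can be estimated as in the sketch below. `minListRequests` is a hypothetical helper; it assumes one request per folder plus one more for every additional page of `PAGE_SIZE` children, and it ignores retries.

```javascript
const PAGE_SIZE = 1000

// childCounts: number of direct children of each folder (the root included).
function minListRequests (childCounts, pageSize = PAGE_SIZE) {
  return childCounts.reduce(
    (sum, n) => sum + Math.max(1, Math.ceil(n / pageSize)),
    0
  )
}

console.log(minListRequests([3, 0, 2500])) // 5
```

Even an empty folder costs one request, which is why trees with many small folders take noticeably longer to scan than a few large ones.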


It is not yet known whether google rate-limits this API, or whether using it affects the security of the google account itself.

**Do not abuse it; use at your own risk.**

31

server.js

Normal file

@ -0,0 +1,31 @@

const dayjs = require('dayjs')
const Koa = require('koa')
const bodyParser = require('koa-bodyparser')

const router = require('./src/router')

const app = new Koa()
app.proxy = true

app.use(catcher)
app.use(bodyParser())
app.use(router.routes())
app.use(router.allowedMethods())

app.use(ctx => {
  ctx.status = 404
  ctx.body = 'not found'
})

const PORT = 23333
// Pass a callback so the address is logged once the server is listening
app.listen(PORT, '127.0.0.1', () => console.log('http://127.0.0.1:' + PORT))

async function catcher (ctx, next) {
  try {
    return await next()
  } catch (e) {
    console.error(e)
    ctx.status = 500
    ctx.body = e.message
  }
}

676

src/gd.js

Normal file

@ -0,0 +1,676 @@

const fs = require('fs')
const path = require('path')
const dayjs = require('dayjs')
const prompts = require('prompts')
const pLimit = require('p-limit')
const axios = require('@viegg/axios')
const HttpsProxyAgent = require('https-proxy-agent')
const { GoogleToken } = require('gtoken')
const handle_exit = require('signal-exit')

const { AUTH, RETRY_LIMIT, PARALLEL_LIMIT, TIMEOUT_BASE, TIMEOUT_MAX, LOG_DELAY, PAGE_SIZE, DEFAULT_TARGET } = require('../config')
const { db } = require('../db')
const { make_table, make_tg_table, make_html, summary } = require('./summary')

const FOLDER_TYPE = 'application/vnd.google-apps.folder'
const { https_proxy } = process.env
const axins = axios.create(https_proxy ? { httpsAgent: new HttpsProxyAgent(https_proxy) } : {})

const sa_files = fs.readdirSync(path.join(__dirname, '../sa')).filter(v => v.endsWith('.json'))
let SA_TOKENS = sa_files.map(filename => {
  const gtoken = new GoogleToken({
    keyFile: path.join(__dirname, '../sa', filename),
    scope: ['https://www.googleapis.com/auth/drive']
  })
  return { gtoken, expires: 0 }
})

handle_exit(() => {
  // console.log('handle_exit running')
  const records = db.prepare('select id from task where status=?').all('copying')
  records.forEach(v => {
    db.prepare('update task set status=? where id=?').run('interrupt', v.id)
  })
  records.length && console.log(records.length, 'task interrupted')
})


async function gen_count_body ({ fid, type, update, service_account }) {
  async function update_info () {
    const info = await walk_and_save({ fid, update, service_account }) // this step has already stored the fid record in the database
    const { summary } = db.prepare('SELECT summary from gd WHERE fid=?').get(fid)
    return [info, JSON.parse(summary)]
  }

  function render_smy (smy, type) {
    if (['html', 'curl', 'tg'].includes(type)) {
      smy = (typeof smy === 'object') ? smy : JSON.parse(smy)
      const type_func = {
        html: make_html,
        curl: make_table,
        tg: make_tg_table
      }
      return type_func[type](smy)
    } else { // output json by default
      return (typeof smy === 'string') ? smy : JSON.stringify(smy)
    }
  }

  let info, smy
  const record = db.prepare('SELECT * FROM gd WHERE fid = ?').get(fid)
  if (!record || update) {
    [info, smy] = await update_info()
  }
  if (type === 'all') {
    info = info || get_all_by_fid(fid)
    if (!info) { // the previous counting run was interrupted
      [info] = await update_info()
    }
    return JSON.stringify(info)
  }
  if (smy) return render_smy(smy, type)
  if (record && record.summary) return render_smy(record.summary, type)
  info = info || get_all_by_fid(fid)
  if (info) {
    smy = summary(info)
  } else {
    [info, smy] = await update_info()
  }
  return render_smy(smy, type)
}


async function count ({ fid, update, sort, type, output, not_teamdrive, service_account }) {
  sort = (sort || '').toLowerCase()
  type = (type || '').toLowerCase()
  output = (output || '').toLowerCase()
  if (!update) {
    const info = get_all_by_fid(fid)
    if (info) {
      console.log('Found locally cached data, cached at:', dayjs(info.mtime).format('YYYY-MM-DD HH:mm:ss'))
      const out_str = get_out_str({ info, type, sort })
      if (output) return fs.writeFileSync(output, out_str)
      return console.log(out_str)
    }
  }
  const result = await walk_and_save({ fid, not_teamdrive, update, service_account })
  const out_str = get_out_str({ info: result, type, sort })
  if (output) {
    fs.writeFileSync(output, out_str)
  } else {
    console.log(out_str)
  }
}

function get_out_str ({ info, type, sort }) {
  const smy = summary(info, sort)
  let out_str
  if (type === 'html') {
    out_str = make_html(smy)
  } else if (type === 'json') {
    out_str = JSON.stringify(smy)
  } else if (type === 'all') {
    out_str = JSON.stringify(info)
  } else {
    out_str = make_table(smy)
  }
  return out_str
}


function get_all_by_fid (fid) {
  const record = db.prepare('SELECT * FROM gd WHERE fid = ?').get(fid)
  if (!record) return null
  const { info, subf } = record
  let result = JSON.parse(info)
  result = result.map(v => {
    v.parent = fid
    return v
  })
  if (!subf) return result
  return recur(result, JSON.parse(subf))

  function recur (result, subf) {
    if (!subf.length) return result
    const arr = subf.map(v => {
      const row = db.prepare('SELECT * FROM gd WHERE fid = ?').get(v)
      if (!row) return null // no record for this fid: the process was interrupted or the directory was not fully read last time
      let info = JSON.parse(row.info)
      info = info.map(vv => {
        vv.parent = v
        return vv
      })
      return { info, subf: JSON.parse(row.subf) }
    })
    if (arr.some(v => v === null)) return null
    const sub_subf = [].concat(...arr.map(v => v.subf).filter(v => v))
    result = result.concat(...arr.map(v => v.info))
    return recur(result, sub_subf)
  }
}


async function walk_and_save ({ fid, not_teamdrive, update, service_account }) {
  const result = []
  const not_finished = []
  const limit = pLimit(PARALLEL_LIMIT)

  const loop = setInterval(() => {
    console.log('================')
    console.log('Objects fetched so far', result.length)
    console.log('Network requests in progress', limit.activeCount)
    console.log('Directories waiting in the queue', limit.pendingCount)
  }, LOG_DELAY)

  async function recur (parent) {
    let files, should_save
    if (update) {
      files = await limit(() => ls_folder({ fid: parent, not_teamdrive, service_account }))
      should_save = true
    } else {
      const record = db.prepare('SELECT * FROM gd WHERE fid = ?').get(parent)
      if (record) {
        files = JSON.parse(record.info)
      } else {
        files = await limit(() => ls_folder({ fid: parent, not_teamdrive, service_account }))
        should_save = true
      }
    }
    if (!files) return
    if (files.not_finished) not_finished.push(parent)
    should_save && save_files_to_db(parent, files)
    const folders = files.filter(v => v.mimeType === FOLDER_TYPE)
    files.forEach(v => v.parent = parent)
    result.push(...files)
    return Promise.all(folders.map(v => recur(v.id)))
  }
  await recur(fid)
  console.log('Finished fetching information')
  not_finished.length ? console.log('IDs of directories that were not fully read:', JSON.stringify(not_finished)) : console.log('All directories were read completely')
  clearInterval(loop)
  const smy = summary(result)
  db.prepare('UPDATE gd SET summary=?, mtime=? WHERE fid=?').run(JSON.stringify(smy), Date.now(), fid)
  return result
}


function save_files_to_db (fid, files) {
  // Directories whose listing did not finish are not saved, so the next call to get_all_by_fid returns null and walk_and_save is called again to complete them
  if (files.not_finished) return
  let subf = files.filter(v => v.mimeType === FOLDER_TYPE).map(v => v.id)
  subf = subf.length ? JSON.stringify(subf) : null
  const exists = db.prepare('SELECT fid FROM gd WHERE fid = ?').get(fid)
  if (exists) {
    db.prepare('UPDATE gd SET info=?, subf=?, mtime=? WHERE fid=?')
      .run(JSON.stringify(files), subf, Date.now(), fid)
  } else {
    db.prepare('INSERT INTO gd (fid, info, subf, ctime) VALUES (?, ?, ?, ?)')
      .run(fid, JSON.stringify(files), subf, Date.now())
  }
}

|

|

||||||

|

async function ls_folder ({ fid, not_teamdrive, service_account }) {

|

||||||

|

let files = []

|

||||||

|

let pageToken

|

||||||

|

const search_all = { includeItemsFromAllDrives: true, supportsAllDrives: true }

|

||||||

|

const params = ((fid === 'root') || not_teamdrive) ? {} : search_all

|

||||||

|

params.q = `'${fid}' in parents and trashed = false`

|

||||||

|

params.orderBy = 'folder,name desc'

|

||||||

|

params.fields = 'nextPageToken, files(id, name, mimeType, size, md5Checksum)'

|

||||||

|

params.pageSize = Math.min(PAGE_SIZE, 1000)

|

||||||

|

const use_sa = (fid !== 'root') && (service_account || !not_teamdrive) // 不带参数默认使用sa

|

||||||

|

const headers = await gen_headers(use_sa)

|

||||||

|

do {

|

||||||

|

if (pageToken) params.pageToken = pageToken

|

||||||

|

let url = 'https://www.googleapis.com/drive/v3/files'

|

||||||

|

url += '?' + params_to_query(params)

|

||||||

|

const payload = { headers, timeout: TIMEOUT_BASE }

|

||||||

|

let retry = 0

|

||||||

|

let data

|

||||||

|

while (!data && (retry < RETRY_LIMIT)) {

|

||||||

|

try {

|

||||||

|

data = (await axins.get(url, payload)).data

|

||||||

|

} catch (err) {

|

||||||

|

handle_error(err)

|

||||||

|

retry++

|

||||||

|

payload.timeout = Math.min(payload.timeout * 2, TIMEOUT_MAX)

|

||||||

|

}

|

||||||

|

}

|

||||||

|

if (!data) {

|

||||||

|

console.error('Folder listing incomplete (partial read), params:', params)

|

||||||

|

files.not_finished = true

|

||||||

|

return files

|

||||||

|

}

|

||||||

|

files = files.concat(data.files)

|

||||||

|

pageToken = data.nextPageToken

|

||||||

|

} while (pageToken)

|

||||||

|

|

||||||

|

return files

|

||||||

|

}

|

||||||

|

|

||||||

|

async function gen_headers (use_sa) {

|

||||||

|

use_sa = use_sa && SA_TOKENS.length

|

||||||

|

const access_token = use_sa ? (await get_sa_token()).access_token : (await get_access_token())

|

||||||

|

return { authorization: 'Bearer ' + access_token }

|

||||||

|

}

|

||||||

|

|

||||||

|

function params_to_query (data) {

|

||||||

|

const ret = []

|

||||||

|

for (let d in data) {

|

||||||

|

ret.push(encodeURIComponent(d) + '=' + encodeURIComponent(data[d]))

|

||||||

|

}

|

||||||

|

return ret.join('&')

|

||||||

|

}

|

||||||

|

|

||||||

|

async function get_access_token () {

|

||||||

|

const { expires, access_token, client_id, client_secret, refresh_token } = AUTH

|

||||||

|

if (expires > Date.now()) return access_token

|

||||||

|

|

||||||

|

const url = 'https://www.googleapis.com/oauth2/v4/token'

|

||||||

|

const headers = { 'Content-Type': 'application/x-www-form-urlencoded' }

|

||||||

|

const config = { headers }

|

||||||

|

const params = { client_id, client_secret, refresh_token, grant_type: 'refresh_token' }

|

||||||

|

const { data } = await axins.post(url, params_to_query(params), config)

|

||||||

|

// console.log('Got new token:', data)

|

||||||

|

AUTH.access_token = data.access_token

|

||||||

|

AUTH.expires = Date.now() + 1000 * data.expires_in

|

||||||

|

return data.access_token

|

||||||

|

}

|

||||||

|

|

||||||

|

async function get_sa_token () {

|

||||||

|

const el = get_random_element(SA_TOKENS)

|

||||||

|

const { value, expires, gtoken } = el

|

||||||

|

// gtoken is passed along so that an account whose quota is exhausted can be filtered out by it

|

||||||

|

if (Date.now() < expires) return { access_token: value, gtoken }

|

||||||

|

return new Promise((resolve, reject) => {

|

||||||

|

gtoken.getToken((err, tokens) => {

|

||||||

|

if (err) {

|

||||||

|

reject(err)

|

||||||

|

} else {

|

||||||

|

// console.log('got sa token', tokens)

|

||||||

|

const { access_token, expires_in } = tokens

|

||||||

|

el.value = access_token

|

||||||

|

el.expires = Date.now() + 1000 * expires_in

|

||||||

|

resolve({ access_token, gtoken })

|

||||||

|

}

|

||||||

|

})

|

||||||

|

})

|

||||||

|

}

|

||||||

|

|

||||||

|

function get_random_element (arr) {

|

||||||

|

return arr[~~(arr.length * Math.random())]

|

||||||

|

}

|

||||||

|

|

||||||

|

function validate_fid (fid) {

|

||||||

|

if (!fid) return false

|

||||||

|

fid = String(fid)

|

||||||

|

const whitelist = ['root', 'appDataFolder', 'photos']

|

||||||

|

if (whitelist.includes(fid)) return true

|

||||||

|

if (fid.length < 10 || fid.length > 100) return false

|

||||||

|

const reg = /^[a-zA-Z0-9_-]+$/

|

||||||

|

return reg.test(fid)

|

||||||

|

}
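The following sketch shows how the id check behaves on a few inputs. It is a standalone copy written with `RegExp.prototype.test`, so every result is a plain boolean. The long id is the shared folder used in `compare.md`; the other inputs are made up.

```javascript
// Standalone copy of the check above so the snippet runs on its own.
function validate_fid (fid) {
  if (!fid) return false
  fid = String(fid)
  const whitelist = ['root', 'appDataFolder', 'photos']
  if (whitelist.includes(fid)) return true
  if (fid.length < 10 || fid.length > 100) return false
  return /^[a-zA-Z0-9_-]+$/.test(fid)
}

const fid_checks = {
  alias: validate_fid('root'),
  real_id: validate_fid('1W9gf3ReGUboJUah-7XDg5jKXKl5XwQQ3'),
  too_short: validate_fid('abc'),
  bad_chars: validate_fid('not a valid id!!'),
  missing: validate_fid(undefined)
}
console.log(fid_checks)
```

The length bounds and the character class are a cheap sanity filter rather than a guarantee that the id exists; an id that passes can still be rejected by the Drive API.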

|

||||||

|

|

||||||

|

async function create_folder (name, parent, use_sa) {

|

||||||

|

let url = `https://www.googleapis.com/drive/v3/files`

|

||||||

|

const params = { supportsAllDrives: true }

|

||||||

|

url += '?' + params_to_query(params)

|

||||||

|

const post_data = {

|

||||||

|

name,

|

||||||

|

mimeType: FOLDER_TYPE,

|

||||||

|

parents: [parent]

|

||||||

|

}

|

||||||

|

const headers = await gen_headers(use_sa)

|

||||||

|

const config = { headers }

|

||||||

|

let retry = 0

|

||||||

|

let data

|

||||||

|

while (!data && (retry < RETRY_LIMIT)) {

|

||||||

|

try {

|

||||||

|

data = (await axins.post(url, post_data, config)).data

|

||||||

|

} catch (err) {

|

||||||

|

retry++

|

||||||

|

handle_error(err)

|

||||||

|

console.log('Retrying folder creation:', name, 'retry count:', retry)

|

||||||

|

}

|

||||||

|

}

|

||||||

|

return data

|

||||||

|

}

|

||||||

|

|

||||||

|

async function get_info_by_id (fid, use_sa) {

|

||||||

|

let url = `https://www.googleapis.com/drive/v3/files/${fid}`

|

||||||

|

let params = {

|

||||||

|

includeItemsFromAllDrives: true,

|

||||||

|

supportsAllDrives: true,

|

||||||

|

corpora: 'allDrives',

|

||||||

|

fields: 'id,name,owners'

|

||||||

|

}

|

||||||

|

url += '?' + params_to_query(params)

|

||||||

|

const headers = await gen_headers(use_sa)

|

||||||

|

const { data } = await axins.get(url, { headers })

|

||||||

|

return data

|

||||||

|

}

|

||||||

|

|

||||||

|

async function user_choose () {

|

||||||

|

const answer = await prompts({

|

||||||

|

type: 'select',

|

||||||

|

name: 'value',

|

||||||

|

message: 'A previous copy record was found. Continue?',

|

||||||

|

choices: [

|

||||||

|

{ title: 'Continue', description: 'Resume from where the last run stopped', value: 'continue' },

|

||||||

|

{ title: 'Restart', description: 'Ignore the existing record and copy again from scratch', value: 'restart' },

|

||||||

|

{ title: 'Exit', description: 'Exit immediately', value: 'exit' }

|

||||||

|

],

|

||||||

|

initial: 0

|

||||||

|

})

|

||||||

|

return answer.value

|

||||||

|

}

|

||||||

|

|

||||||

|

async function copy ({ source, target, name, min_size, update, not_teamdrive, service_account, is_server }) {

|

||||||

|

target = target || DEFAULT_TARGET

|

||||||

|

if (!target) throw new Error('Target location must not be empty')

|

||||||

|

|

||||||

|

const record = db.prepare('select id, status from task where source=? and target=?').get(source, target)

|

||||||

|

if (record && record.status === 'copying') return console.log('A task with the same source and target is already running, exiting')

|

||||||

|

|

||||||

|

try {

|

||||||

|

return await real_copy({ source, target, name, min_size, update, not_teamdrive, service_account, is_server })

|

||||||

|

} catch (err) {

|

||||||

|

console.error('Error while copying folder', err)

|

||||||

|

const record = db.prepare('select id, status from task where source=? and target=?').get(source, target)

|

||||||

|

if (record) db.prepare('update task set status=? where id=?').run('error', record.id)

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

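// TODO: if the user interrupts the process with ctrl+c, requests that were already sent are not recorded in the local database even if they complete, so duplicate files or folders may be created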

|

||||||

|

async function real_copy ({ source, target, name, min_size, update, not_teamdrive, service_account, is_server }) {

|

||||||

|

async function get_new_root () {

|

||||||

|

if (name) {

|

||||||

|

return create_folder(name, target, service_account)

|

||||||

|

} else {

|

||||||

|

const source_info = await get_info_by_id(source, service_account)

|

||||||

|

return create_folder(source_info.name, target, service_account)

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

const record = db.prepare('select * from task where source=? and target=?').get(source, target)

|

||||||

|

if (record) {

|

||||||

|

const choice = is_server ? 'continue' : await user_choose()

|

||||||

|

if (choice === 'exit') {

|

||||||

|

return console.log('Exiting')

|

||||||

|

} else if (choice === 'continue') {

|

||||||

|

let { copied, mapping } = record

|

||||||

|

const copied_ids = {}

|

||||||

|

const old_mapping = {}

|

||||||

|

copied = copied.trim().split('\n')

|

||||||

|

copied.forEach(id => copied_ids[id] = true)

|

||||||

|

mapping = mapping.trim().split('\n').map(line => line.split(' '))

|

||||||

|

const root = mapping[0][1]

|

||||||

|

mapping.forEach(arr => old_mapping[arr[0]] = arr[1])

|

||||||

|

db.prepare('update task set status=? where id=?').run('copying', record.id)

|

||||||

|

const arr = await walk_and_save({ fid: source, update, not_teamdrive, service_account })

|

||||||

|

let files = arr.filter(v => v.mimeType !== FOLDER_TYPE).filter(v => !copied_ids[v.id])

|

||||||

|

if (min_size) files = files.filter(v => v.size >= min_size)

|

||||||

|

const folders = arr.filter(v => v.mimeType === FOLDER_TYPE).filter(v => !old_mapping[v.id])

|

||||||

|

console.log('Folders to copy:', folders.length)

|

||||||

|

console.log('Files to copy:', files.length)

|

||||||

|

const all_mapping = await create_folders({

|

||||||

|

old_mapping,

|

||||||

|

source,

|

||||||

|

folders,

|

||||||

|

service_account,

|

||||||

|

root,

|

||||||

|

task_id: record.id

|

||||||

|

})

|

||||||

|

await copy_files({ files, mapping: all_mapping, root, task_id: record.id })

|

||||||

|

db.prepare('update task set status=?, ftime=? where id=?').run('finished', Date.now(), record.id)

|

||||||

|

return { id: root }

|

||||||

|

} else if (choice === 'restart') {

|

||||||

|

const new_root = await get_new_root()

|

||||||

|

if (!new_root) throw new Error('Failed to create folder, please check that your account has the required permissions')

|

||||||

|

const root_mapping = source + ' ' + new_root.id + '\n'

|

||||||

|

db.prepare('update task set status=?, copied=?, mapping=? where id=?')

|

||||||

|

.run('copying', '', root_mapping, record.id)

|

||||||

|

const arr = await walk_and_save({ fid: source, update: true, not_teamdrive, service_account })

|

||||||

|

let files = arr.filter(v => v.mimeType !== FOLDER_TYPE)

|

||||||

|

if (min_size) files = files.filter(v => v.size >= min_size)

|

||||||

|

const folders = arr.filter(v => v.mimeType === FOLDER_TYPE)

|

||||||

|

console.log('Folders to copy:', folders.length)

|

||||||

|

console.log('Files to copy:', files.length)

|

||||||

|

const mapping = await create_folders({

|

||||||

|

source,

|

||||||

|

folders,

|

||||||

|

service_account,

|

||||||

|

root: new_root.id,

|

||||||

|

task_id: record.id

|

||||||

|

})

|

||||||

|

await copy_files({ files, mapping, root: new_root.id, task_id: record.id })

|

||||||

|

db.prepare('update task set status=?, ftime=? where id=?').run('finished', Date.now(), record.id)

|

||||||

|

return new_root

|

||||||

|

} else {

|

||||||

|

// exit via ctrl+c

|

||||||

|

return console.log('Exiting')

|

||||||

|

}

|

||||||

|

} else {

|

||||||

|

const new_root = await get_new_root()

|

||||||

|

if (!new_root) throw new Error('Failed to create folder, please check that your account has the required permissions')

|

||||||

|

const root_mapping = source + ' ' + new_root.id + '\n'

|

||||||

|

const { lastInsertRowid } = db.prepare('insert into task (source, target, status, mapping, ctime) values (?, ?, ?, ?, ?)').run(source, target, 'copying', root_mapping, Date.now())

|

||||||

|

const arr = await walk_and_save({ fid: source, update, not_teamdrive, service_account })

|

||||||

|

let files = arr.filter(v => v.mimeType !== FOLDER_TYPE)

|

||||||

|

if (min_size) files = files.filter(v => v.size >= min_size)

|

||||||

|

const folders = arr.filter(v => v.mimeType === FOLDER_TYPE)

|

||||||

|

console.log('Folders to copy:', folders.length)

|

||||||

|

console.log('Files to copy:', files.length)

|

||||||

|

const mapping = await create_folders({

|

||||||

|

source,

|

||||||

|

folders,

|

||||||

|

service_account,

|

||||||

|

root: new_root.id,

|

||||||

|

task_id: lastInsertRowid

|

||||||

|

})

|

||||||

|

await copy_files({ files, mapping, root: new_root.id, task_id: lastInsertRowid })

|

||||||

|

db.prepare('update task set status=?, ftime=? where id=?').run('finished', Date.now(), lastInsertRowid)

|

||||||

|

return new_root

|

||||||

|

}

|

||||||

|

}
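The "continue" branch of `real_copy` rebuilds its state from two newline-separated text columns of the `task` row: `copied` (one file id per line) and `mapping` (one `source_id target_id` pair per line, with the root pair first). The sketch below isolates that parsing step. The helper name `parse_task_record` and the sample ids are hypothetical and exist only for this illustration.

```javascript
// Hypothetical helper that mirrors the parsing done in the 'continue' branch.
function parse_task_record ({ copied, mapping }) {
  // One copied file id per line.
  const copied_ids = {}
  copied.trim().split('\n').forEach(id => { copied_ids[id] = true })

  // One "source_id target_id" pair per line; the first pair is the root folder.
  const pairs = mapping.trim().split('\n').map(line => line.split(' '))
  const old_mapping = {}
  pairs.forEach(([from, to]) => { old_mapping[from] = to })

  return { copied_ids, old_mapping, root: pairs[0][1] }
}

// Made-up ids, shaped like the rows written by copy_files and create_folders.
const example_record = parse_task_record({
  copied: 'f1\nf2\n',
  mapping: 'src dst\na a2\n'
})
console.log(example_record)
```

Storing progress as appended text lines keeps each update a single cheap `UPDATE ... || ?` statement, at the cost of re-parsing the whole column when a task is resumed.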

|

||||||

|

|

||||||

|

async function copy_files ({ files, mapping, root, task_id }) {

|

||||||

|

console.log('Starting file copy, total:', files.length)

|

||||||

|

const limit = pLimit(PARALLEL_LIMIT)

|

||||||

|

let count = 0

|

||||||

|

const loop = setInterval(() => {

|

||||||

|

console.log('================')

|

||||||

|

console.log('Files copied', count)

|

||||||

|

console.log('Network requests in progress', limit.activeCount)

|

||||||

|

console.log('Files waiting in queue', limit.pendingCount)

|

||||||

|

}, LOG_DELAY)

|

||||||

|

await Promise.all(files.map(async file => {

|

||||||

|

const { id, parent } = file

|

||||||

|

const target = mapping[parent] || root

|

||||||

|

const new_file = await limit(() => copy_file(id, target))

|

||||||

|

if (new_file) {

|

||||||

|

db.prepare('update task set status=?, copied = copied || ? where id=?').run('copying', id + '\n', task_id)

|

||||||

|

}

|

||||||

|

count++

|

||||||

|

}))

|

||||||

|

clearInterval(loop)

|

||||||

|

}

|

||||||

|

|

||||||

|

async function copy_file (id, parent) {

|

||||||

|

let url = `https://www.googleapis.com/drive/v3/files/${id}/copy`

|

||||||

|

let params = { supportsAllDrives: true }

|

||||||

|

url += '?' + params_to_query(params)

|

||||||

|

const config = {}

|

||||||

|

let retry = 0

|

||||||

|

while (retry < RETRY_LIMIT) {

|

||||||

|

let gtoken

|

||||||

|

if (SA_TOKENS.length) { // prefer service accounts when SA files are available

|

||||||

|

const temp = await get_sa_token()

|

||||||

|

gtoken = temp.gtoken

|

||||||

|

config.headers = { authorization: 'Bearer ' + temp.access_token }

|

||||||

|

} else {

|

||||||

|

config.headers = await gen_headers()

|

||||||

|

}

|

||||||

|

try {

|

||||||

|

const { data } = await axins.post(url, { parents: [parent] }, config)

|

||||||

|

return data

|

||||||

|

} catch (err) {

|

||||||

|

retry++

|

||||||

|

handle_error(err)

|

||||||

|

const data = err && err.response && err.response.data

|

||||||

|

const message = data && data.error && data.error.message

|

||||||

|

if (message && message.toLowerCase().includes('rate limit')) {

|

||||||

|

SA_TOKENS = SA_TOKENS.filter(v => v.gtoken !== gtoken)

|

||||||

|

console.log('This account hit its usage limit, remaining usable service accounts:', SA_TOKENS.length)

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

if (!SA_TOKENS.length) {

|

||||||

|

throw new Error('All SA accounts have exhausted their quota')

|

||||||

|

} else {

|

||||||

|

console.warn('Failed to copy file, file id: ' + id)

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

async function create_folders ({ source, old_mapping, folders, root, task_id, service_account }) {

|

||||||

|

if (!Array.isArray(folders)) throw new Error('folders must be Array:' + folders)

|

||||||

|

const mapping = old_mapping || {}

|

||||||

|

mapping[source] = root

|

||||||

|

if (!folders.length) return mapping

|

||||||

|

|

||||||

|

console.log('Starting folder copy, total:', folders.length)

|

||||||

|

const limit = pLimit(PARALLEL_LIMIT)

|

||||||

|

let count = 0

|

||||||

|

let same_levels = folders.filter(v => v.parent === folders[0].parent)

|

||||||

|

|

||||||

|

const loop = setInterval(() => {

|

||||||

|

console.log('================')

|

||||||

|

console.log('Folders created', count)

|

||||||

|

console.log('Network requests in progress', limit.activeCount)

|

||||||

|

console.log('Folders waiting in queue', limit.pendingCount)

|

||||||

|

}, LOG_DELAY)

|

||||||

|

|

||||||

|

while (same_levels.length) {

|

||||||

|

await Promise.all(same_levels.map(async v => {

|

||||||

|

const { name, id, parent } = v

|

||||||

|

const target = mapping[parent] || root

|

||||||

|

const new_folder = await limit(() => create_folder(name, target, service_account))

|

||||||

|

count++

|

||||||

|

if (!new_folder) throw new Error('Failed to create folder: ' + name)
mapping[id] = new_folder.id

|

||||||

|

const mapping_record = id + ' ' + new_folder.id + '\n'

|

||||||

|

db.prepare('update task set status=?, mapping = mapping || ? where id=?').run('copying', mapping_record, task_id)

|

||||||

|

}))

|

||||||

|

folders = folders.filter(v => !mapping[v.id])

|

||||||

|

same_levels = [].concat(...same_levels.map(v => folders.filter(vv => vv.parent === v.id)))

|

||||||

|

}

|

||||||

|

|

||||||

|

clearInterval(loop)

|

||||||

|

return mapping

|

||||||

|

}

|

||||||

|

|

||||||

|

function find_dupe (arr) {

|

||||||

|

const files = arr.filter(v => v.mimeType !== FOLDER_TYPE)

|

||||||

|

const folders = arr.filter(v => v.mimeType === FOLDER_TYPE)

|

||||||

|

const exists = {}

|

||||||

|

const dupe_files = []

|

||||||

|

const dupe_folder_keys = {}

|

||||||

|

for (const folder of folders) {

|

||||||

|

const { parent, name } = folder

|

||||||

|

const key = parent + '|' + name

|

||||||

|

if (exists[key]) {

|

||||||

|

dupe_folder_keys[key] = true

|

||||||

|

} else {

|

||||||

|

exists[key] = true

|

||||||

|

}

|

||||||

|

}

|

||||||

|

const dupe_empty_folders = folders.filter(folder => {

|

||||||

|

const { parent, name } = folder

|

||||||

|

const key = parent + '|' + name

|

||||||

|

return dupe_folder_keys[key]

|

||||||

|

}).filter(folder => {

|

||||||

|

const has_child = arr.some(v => v.parent === folder.id)

|

||||||

|

return !has_child

|

||||||

|

})

|

||||||

|

for (const file of files) {

|

||||||

|

const { md5Checksum, parent, name } = file

|

||||||

|

// duplicates are detected by file location and md5 value

|

||||||

|

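if (!md5Checksum) continue // files without an md5 (e.g. Google Docs) cannot be compared by content
const key = parent + '|' + md5Checksum // + '|' + name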

|

||||||

|

if (exists[key]) {

|

||||||

|

dupe_files.push(file)

|

||||||

|

} else {

|

||||||

|

exists[key] = true

|

||||||

|

}

|

||||||

|

}

|

||||||

|

return dupe_files.concat(dupe_empty_folders)

|

||||||

|

}
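The file half of the duplicate check keys every file on its parent folder plus its content hash, so two files count as duplicates only when they sit in the same folder and have the same `md5Checksum`, regardless of name. A minimal sketch of that idea follows. The helper name `find_dupe_files` and the sample data are hypothetical, and the sketch skips entries that have no `md5Checksum`, since Drive only reports a checksum for files with binary content.

```javascript
// Hypothetical helper: returns every file that repeats an earlier
// (parent, md5Checksum) combination.
function find_dupe_files (files) {
  const seen = new Set()
  const dupes = []
  for (const file of files) {
    if (!file.md5Checksum) continue // nothing to compare without a checksum
    const key = file.parent + '|' + file.md5Checksum
    if (seen.has(key)) {
      dupes.push(file)
    } else {
      seen.add(key)
    }
  }
  return dupes
}

// Made-up listing: f2 repeats f1's content in the same folder,
// f3 has the same content but lives elsewhere, f4 has no checksum.
const example_dupes = find_dupe_files([
  { id: 'f1', parent: 'p1', name: 'a.bin', md5Checksum: 'aaa' },
  { id: 'f2', parent: 'p1', name: 'a (1).bin', md5Checksum: 'aaa' },
  { id: 'f3', parent: 'p2', name: 'a.bin', md5Checksum: 'aaa' },
  { id: 'f4', parent: 'p1', name: 'notes' }
]).map(v => v.id)
console.log(example_dupes)
```

The first file seen for a key is kept and later ones are reported, so which copy survives depends on the order of the listing.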

|

||||||

|

|

||||||

|

async function confirm_dedupe ({ file_number, folder_number }) {

|

||||||

|

const answer = await prompts({

|

||||||

|

type: 'select',

|

||||||

|

name: 'value',

|

||||||

|

message: `Found ${file_number} duplicate files and ${folder_number} duplicate folders. Delete them?`,

|

||||||

|

choices: [

|

||||||

|

{ title: 'Yes', description: 'Confirm deletion', value: 'yes' },

|

||||||

|

{ title: 'No', description: 'Do not delete for now', value: 'no' }

|

||||||

|

],

|

||||||

|

initial: 0

|

||||||

|

})

|

||||||

|

return answer.value

|

||||||

|

}

|

||||||

|

|

||||||

|

// can delete a file or a folder; apparently the item does not go to the trash

|

||||||

|

async function rm_file ({ fid, service_account }) {

|

||||||

|

const headers = await gen_headers(service_account)

|

||||||

|

let retry = 0

|

||||||

|

const url = `https://www.googleapis.com/drive/v3/files/${fid}?supportsAllDrives=true`

|

||||||

|

while (retry < RETRY_LIMIT) {

|

||||||

|

try {

|

||||||

|

return await axins.delete(url, { headers })

|

||||||

|

} catch (err) {

|

||||||

|

retry++

|

||||||

|

handle_error(err)

|

||||||

|

console.log('Retrying delete, retry count', retry)

|

||||||

|

}

|

||||||

|

}
throw new Error('Delete failed after ' + RETRY_LIMIT + ' retries, id: ' + fid)

|

||||||

|

}

|

||||||

|

|

||||||

|

async function dedupe ({ fid, update, service_account }) {

|

||||||

|

let arr

|

||||||

|

if (!update) {

|

||||||

|

const info = get_all_by_fid(fid)

|

||||||

|

if (info) {

|

||||||

|

console.log('Found locally cached data, cached at:', dayjs(info.mtime).format('YYYY-MM-DD HH:mm:ss'))

|

||||||

|

arr = info

|

||||||

|

}

|

||||||

|

}

|

||||||

|

arr = arr || await walk_and_save({ fid, update, service_account })

|

||||||

|

const dupes = find_dupe(arr)

|

||||||

|

const folder_number = dupes.filter(v => v.mimeType === FOLDER_TYPE).length

|

||||||

|

const file_number = dupes.length - folder_number

|

||||||

|

const choice = await confirm_dedupe({ file_number, folder_number })

|

||||||

|

if (choice === 'no') {

|

||||||

|

return console.log('Exiting')

|

||||||

|

} else if (!choice) {

|

||||||

|

return // ctrl+c

|

||||||

|

}

|

||||||

|

const limit = pLimit(PARALLEL_LIMIT)

|

||||||

|

let folder_count = 0

|

||||||

|

let file_count = 0

|

||||||

|

await Promise.all(dupes.map(async v => {

|

||||||

|

try {

|

||||||

|

await limit(() => rm_file({ fid: v.id, service_account }))

|

||||||

|

if (v.mimeType === FOLDER_TYPE) {

|

||||||

|

console.log('Deleted folder', v.name)

|

||||||

|

folder_count++

|

||||||

|

} else {

|

||||||

|

console.log('Deleted file', v.name)

|

||||||

|

file_count++

|

||||||

|

}

|

||||||

|

} catch (e) {

|

||||||

|

console.log('Delete failed', e.message)

|

||||||

|

}

|

||||||

|

}))

|

||||||

|

return { file_count, folder_count }

|

||||||

|

}

|

||||||

|

|

||||||

|

function handle_error (err) {

|

||||||

|

const data = err && err.response && err.response.data

|

||||||

|

data ? console.error(JSON.stringify(data)) : console.error(err.message)

|

||||||

|

}

|

||||||

|

|

||||||

|

module.exports = { ls_folder, count, validate_fid, copy, dedupe, copy_file, gen_count_body, real_copy }

|

||||||

113

src/router.js

Normal file

@ -0,0 +1,113 @@

|

|||||||

|

const Router = require('@koa/router')

|

||||||

|

|

||||||

|

const { db } = require('../db')

|

||||||

|

const { validate_fid, gen_count_body } = require('./gd')

|

||||||

|

const { send_count, send_help, send_choice, send_task_info, sm, extract_fid, reply_cb_query, tg_copy, send_all_tasks } = require('./tg')

|

||||||

|

|

||||||

|

const { AUTH } = require('../config')

|

||||||

|

const { tg_whitelist } = AUTH

|

||||||

|

|

||||||

|

const counting = {}

|

||||||

|

const router = new Router()

|

||||||

|

|

||||||

|

router.get('/api/gdurl/count', async ctx => {

|

||||||

|

const { query, headers } = ctx.request

|

||||||

|

let { fid, type, update } = query

|

||||||

|

if (!validate_fid(fid)) throw new Error('Invalid share ID')

|

||||||

|

let ua = headers['user-agent'] || ''

|

||||||

|

ua = ua.toLowerCase()

|

||||||

|

type = (type || '').toLowerCase()

|

||||||

|

if (!type) {

|

||||||

|

if (ua.includes('curl')) {

|

||||||

|

type = 'curl'

|

||||||

|

} else if (ua.includes('mozilla')) {

|

||||||

|

type = 'html'

|

||||||

|

} else {

|

||||||

|

type = 'json'

|

||||||

|

}

|

||||||

|

}

|

||||||

|

if (type === 'html') {

|

||||||

|

ctx.set('Content-Type', 'text/html; charset=utf-8')

|

||||||

|

} else if (['json', 'all'].includes(type)) {

|

||||||

|

ctx.set('Content-Type', 'application/json; charset=UTF-8')

|

||||||

|

}

|

||||||

|

ctx.body = await gen_count_body({ fid, type, update, service_account: true })

|

||||||

|

})

|

||||||

|

|

||||||

|

router.post('/api/gdurl/tgbot', async ctx => {

|

||||||

|

const { body } = ctx.request

|

||||||

|

console.log('ctx.ip', ctx.ip) // access could be restricted to the Telegram servers' IPs only

|